Introduction to spOccupancy

Jeffrey W. Doser, Andrew O. Finley, Marc Kéry, Elise F. Zipkin

2022 (last update: December 13, 2024)

Source:vignettes/modelFitting.Rmd

modelFitting.RmdIntroduction

This vignette provides worked examples and explanations for fitting

single-species, multi-species, and integrated occupancy models in the

spOccupancy R package. We will provide step by step

examples on how to fit the following models:

- Occupancy model using

PGOcc(). - Spatial occupancy model using

spPGOcc(). - Multi-species occupancy model using

msPGOcc(). - Spatial multi-species occupancy model using

spMsPGOcc(). - Integrated occupancy model using

intPGOcc(). - Spatial integrated occupancy model using

spIntPGOcc().

We fit all occupancy models using Pólya-Gamma data augmentation (Polson, Scott, and Windle 2013) for

computational efficiency (Pólya-Gamma

is where the PG comes from in all spOccupancy

model fitting function names). In this vignette, we will provide a brief

description of each model, with full statistical details provided in a

separate MCMC

sampler vignette. We will also show how spOccupancy

provides functions for posterior predictive checks as a Goodness of Fit

assessment, model comparison and assessment using the Widely Applicable

Information Criterion (WAIC), k-fold cross-validation, and out of sample

predictions using standard R helper functions (e.g.,

predict()).

Note that this vignette does not detail all spOccupancy

functionality, and rather just details the core functionality presented

in the original implementation of the package. Additional functionality,

and associated vignettes, include:

- Improved spatial multi-species occupancy models that account for residual species correlations

- Multi-season occupancy models

- Spatially-varying coefficient occupancy models

- Integrated multi-species occupancy models

To get started, we load the spOccupancy package, as well

as the coda package, which we will use for some MCMC

summary and diagnostics. We will also use the stars and

ggplot2 packages to create some basic plots of our results.

We then set a seed so you can reproduce the same results as we do.

Example data set: Foliage-gleaning birds at Hubbard Brook

As an example data set throughout this vignette, we will use data

from twelve foliage-gleaning bird species collected from point count

surveys at Hubbard Brook Experimental Forest (HBEF) in New Hampshire,

USA. Specific details on the data set, which is just a small subset from

a long-term survey, are available on the Hubbard

Brook website and Doser et al. (2022).

The data are provided as part of the spOccupancy package

and are loaded with data(hbef2015). Point count surveys

were conducted at 373 sites over three replicates, each of 10 minutes in

length and with a detection radius of 100m. In the data set provided

here, we converted these data to detection-nondetection data. Some sites

were not visited for all three replicates. Additional information on the

data set (including individual species in the data set) can be viewed in

the man page using help(hbef2015).

List of 4

$ y : num [1:12, 1:373, 1:3] 0 0 0 0 0 1 0 0 0 0 ...

..- attr(*, "dimnames")=List of 3

.. ..$ : chr [1:12] "AMRE" "BAWW" "BHVI" "BLBW" ...

.. ..$ : chr [1:373] "1" "2" "3" "4" ...

.. ..$ : chr [1:3] "1" "2" "3"

$ occ.covs: num [1:373, 1] 475 494 546 587 588 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : NULL

.. ..$ : chr "Elevation"

$ det.covs:List of 2

..$ day: num [1:373, 1:3] 156 156 156 156 156 156 156 156 156 156 ...

..$ tod: num [1:373, 1:3] 330 346 369 386 409 425 447 463 482 499 ...

$ coords : num [1:373, 1:2] 280000 280000 280000 280001 280000 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:373] "1" "2" "3" "4" ...

.. ..$ : chr [1:2] "X" "Y"Thus, the object hbef2015 is a list comprised of the

detection-nondetection data (y), covariates on the

occurrence portion of the model (occ.covs), covariates on

the detection portion of the model (det.covs), and the

spatial coordinates of each site (coords) for use in

spatial occupancy models and in plotting. This list is in the exact

format required for input to spOccupancy model functions.

hbef2015 contains data on 12 species in the

three-dimensional array y, where the dimensions of

y correspond to species (12), sites (373), and replicates

(3). For single-species occupancy models in Section 2 and 3, we will

only use data on the charming Ovenbird (OVEN; Seiurus

aurocapilla), so we next subset the hbef2015 list to

only include data from OVEN in a new object ovenHBEF.

sp.names <- dimnames(hbef2015$y)[[1]]

ovenHBEF <- hbef2015

ovenHBEF$y <- ovenHBEF$y[sp.names == "OVEN", , ]

table(ovenHBEF$y) # Quick summary.

0 1

518 588 We see that OVEN is detected at a little over half of all site-replicate combinations.

Single-species occupancy models

Basic model description

Let \(z_j\) be the true presence (1) or absence (0) of a species at site \(j\), with \(j = 1, \dots, J\). For our OVEN example, \(J = 373\). Following the basic occupancy model (MacKenzie et al. 2002; Tyre et al. 2003), we assume this latent occurrence variable arises from a Bernoulli process following

\[\begin{equation} \begin{split} &z_j \sim \text{Bernoulli}(\psi_j), \\ &\text{logit}(\psi_j) = \boldsymbol{x}^{\top}_j\boldsymbol{\beta}, \end{split} \end{equation}\]

where \(\psi_j\) is the probability of occurrence at site \(j\), which is a function of site-specific covariates \(\boldsymbol{X}\) and a vector of regression coefficients (\(\boldsymbol{\beta}\)).

We do not directly observe \(z_j\), but rather we observe an imperfect representation of the latent occurrence process as a result of imperfect detection (i.e., the failure to detect a species at a site when it is truly present). Let \(y_{j, k}\) be the observed detection (1) or nondetection (0) of a species of interest at site \(j\) during replicate \(k\) for each of \(k = 1, \dots, K_j\) replicates. Note that the number of replicates, \(K_j\), can vary by site and in practical applications will often be equal to 1 for a subset of sites (i.e., some sites will have no replicate surveys). For our OVEN example, the maximum value of \(K_j\) is three. We envision the detection-nondetection data as arising from a Bernoulli process conditional on the true latent occurrence process:

\[\begin{equation} \begin{split} &y_{j, k} \sim \text{Bernoulli}(p_{j, k}z_j), \\ &\text{logit}(p_{j, k}) = \boldsymbol{v}^{\top}_{j, k}\boldsymbol{\alpha}, \end{split} \end{equation}\]

where \(p_{j, k}\) is the probability of detecting a species at site \(j\) during replicate \(k\) (given it is present at site \(j\)), which is a function of site and replicate specific covariates \(\boldsymbol{V}\) and a vector of regression coefficients (\(\boldsymbol{\alpha}\)).

To complete the Bayesian specification of the model, we assign multivariate normal priors for the occurrence (\(\boldsymbol{\beta}\)) and detection (\(\boldsymbol{\alpha}\)) regression coefficients. To yield an efficient implementation of the occupancy model using a logit link function, we use Pólya-Gamma data augmentation (Polson, Scott, and Windle 2013), which is described in depth in a separate MCMC sampler vignette.

Fitting single-species occupancy models with

PGOcc()

The PGOcc() function fits single-species occupancy

models using Pólya-Gamma latent variables, which makes it more efficient

than standard Bayesian implementations of occupancy models using a logit

link function (Polson, Scott, and Windle 2013;

Clark and Altwegg 2019). PGOcc() has the following

arguments:

PGOcc(occ.formula, det.formula, data, inits, priors, n.samples,

n.omp.threads = 1, verbose = TRUE, n.report = 100,

n.burn = round(.10 * n.samples), n.thin = 1, n.chains = 1,

k.fold, k.fold.threads = 1, k.fold.seed, k.fold.only = FALSE, ...)The first two arguments, occ.formula and

det.formula, use standard R model syntax to denote the

covariates to be included in the occurrence and detection portions of

the model, respectively. Only the right hand side of the formulas are

included. Random intercepts can be included in both the occurrence and

detection portions of the single-species occupancy model using

lme4 syntax (Bates et al.

2015). For example, to include a random intercept for different

observers in the detection portion of the model, we would include

(1 | observer) in the det.formula, where

observer indicates the specific observer for each data

point. The names of variables given in the formulas should correspond to

those found in data, which is a list consisting of the

following tags: y (detection-nondetection data),

occ.covs (occurrence covariates), det.covs

(detection covariates). y should be stored as a sites x

replicate matrix, occ.covs as a matrix or data frame with

site-specific covariate values, and det.covs as a list with

each list element corresponding to a covariate to include in the

detection portion of the model. Covariates on detection can vary by site

and/or survey, and so these covariates may be specified as a site by

survey matrix for survey-level covariates or as a one-dimensional vector

for survey level covariates. The ovenHBEF list is already

in the required format. Here we will model OVEN occurrence as a function

of linear and quadratic elevation and will include three observational

covariates (linear and quadratic day of survey, time of day of survey)

on the detection portion of the model. We standardize all covariates by

using the scale() function in our model specification, and

use the I() function to specify quadratic effects:

oven.occ.formula <- ~ scale(Elevation) + I(scale(Elevation)^2)

oven.det.formula <- ~ scale(day) + scale(tod) + I(scale(day)^2)

# Check out the format of ovenHBEF

str(ovenHBEF)List of 4

$ y : num [1:373, 1:3] 1 1 0 1 0 0 1 0 1 1 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:373] "1" "2" "3" "4" ...

.. ..$ : chr [1:3] "1" "2" "3"

$ occ.covs: num [1:373, 1] 475 494 546 587 588 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : NULL

.. ..$ : chr "Elevation"

$ det.covs:List of 2

..$ day: num [1:373, 1:3] 156 156 156 156 156 156 156 156 156 156 ...

..$ tod: num [1:373, 1:3] 330 346 369 386 409 425 447 463 482 499 ...

$ coords : num [1:373, 1:2] 280000 280000 280000 280001 280000 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:373] "1" "2" "3" "4" ...

.. ..$ : chr [1:2] "X" "Y"Next, we specify the initial values for the MCMC sampler in

inits. PGOcc() (and all other

spOccupancy model fitting functions) will set initial

values by default, but here we will do this explicitly, since in more

complicated cases setting initial values close to the presumed solutions

can be vital for success of an MCMC-based analysis. For all models

described in this vignette (in particular the non-spatial models),

choice of the initial values is largely inconsequential, with the

exception being that specifying initial values close to the presumed

solutions can decrease the amount of samples you need to run to arrive

at convergence of the MCMC chains. Thus, when first running a model in

spOccupancy, we recommend fitting the model using the

default initial values that spOccupancy provides, which are

based on the prior distributions. After running the model for a

reasonable period, if you find the chains are taking a long time to

reach convergence, you then may wish to set the initial values to the

mean estimates of the parameters from the initial model fit, as this

will likely help reduce the amount of time you need to run the

model.

The default initial values for occurrence and detection regression

coefficients (including the intercepts) are random values from the prior

distributions adopted in the model, while the default initial values for

the latent occurrence effects are set to 1 if the species was observed

at a site and 0 otherwise. Initial values are specified in a list with

the following tags: z (latent occurrence values),

alpha (detection intercept and regression coefficients),

and beta (occurrence intercept and regression

coefficients). Below we set all initial values of the regression

coefficients to 0, and set initial values for z based on

the detection-nondetection data matrix. For the occurrence

(beta) and detection (alpha) regression

coefficients, the initial values are passed either as a vector of length

equal to the number of estimated parameters (including an intercept, and

in the order specified in the model formula), or as a single value if

setting the same initial value for all parameters (including the

intercept). Below we take the latter approach. To specify the initial

values for the latent occurrence at each site (z), we must

ensure we set the value to 1 at all sites where OVEN was detected at

least once, because if we detect OVEN at a site then the value of

z is 1 with complete certainty (under the assumption of the

model that there are no false positives). If the initial value for

z is set to 0 at one or more sites when the species was

detected, the occupancy model will fail. spOccupancy will

provide a clear error message if the supplied initial values for

z are invalid. Below we use the raw detection-nondetection

data and the apply() function to set the initial values to

1 if OVEN was detected at that site and 0 otherwise.

# Format with explicit specification of inits for alpha and beta

# with four detection parameters and three occurrence parameters

# (including the intercept).

oven.inits <- list(alpha = c(0, 0, 0, 0),

beta = c(0, 0, 0),

z = apply(ovenHBEF$y, 1, max, na.rm = TRUE))

# Format with abbreviated specification of inits for alpha and beta.

oven.inits <- list(alpha = 0,

beta = 0,

z = apply(ovenHBEF$y, 1, max, na.rm = TRUE))We next specify the priors for the occurrence and detection

regression coefficients. The Pólya-Gamma data augmentation algorithm

employed by spOccupancy assumes normal priors for both the

detection and occurrence regression coefficients. These priors are

specified in a list with tags beta.normal for occurrence

and alpha.normal for detection parameters (including

intercepts). Each list element is then itself a list, with the first

element of the list consisting of the hypermeans for each coefficient

and the second element of the list consisting of the hypervariances for

each coefficient. Alternatively, the hypermean and hypervariances can be

specified as a single value if the same prior is used for all regression

coefficients. By default, spOccupancy will set the

hypermeans to 0 and the hypervariances to 2.72, which corresponds to a

relatively flat prior on the probability scale (0, 1; Lunn et al. (2013)). Broms, Hooten, and Fitzpatrick (2016), Northrup and Gerber (2018), and others show such

priors are an adequate choice when the goal is to specify relatively

non-informative priors. We will use these default priors here, but we

specify them explicitly below for clarity

oven.priors <- list(alpha.normal = list(mean = 0, var = 2.72),

beta.normal = list(mean = 0, var = 2.72))Our last step is to specify the number of samples to produce with the

MCMC algorithm (n.samples), the length of burn-in

(n.burn), the rate at which we want to thin the posterior

samples (n.thin), and the number of MCMC chains to run

(n.chains). Note that currently spOccupancy

runs multiple chains sequentially and does not allow chains to be run

simultaneously in parallel across multiple threads. Instead, we allow

for within-chain parallelization using the n.omp.threads

argument. We can set n.omp.threads to a number greater than

1 and smaller than the number of threads on the computer you are using.

Generally, setting n.omp.threads > 1 will not result in

decreased run times for non-spatial models in spOccupancy,

but can substantially decrease run time when fitting spatial models

(Finley, Datta, and Banerjee 2020). Here

we set n.omp.threads = 1.

For a simple single-species occupancy model, we shouldn’t need too many samples and will only need a small amount of burn-in and thinning. We will run the model using three chains to assess convergence using the Gelman-Rubin diagnostic (Rhat; Brooks and Gelman (1998)).

n.samples <- 5000

n.burn <- 3000

n.thin <- 2

n.chains <- 3We are now nearly set to run the occupancy model. The

verbose argument is a logical value indicating whether or

not MCMC sampler progress is reported to the screen. If

verbose = TRUE, sampler progress is reported after every

multiple of the specified number of iterations in the

n.report argument. We set verbose = TRUE and

n.report = 1000 to report progress after every 1000th MCMC

iteration. The last four arguments to PGOcc()

(k.fold, k.fold.threads,

k.fold.seed, k.fold.only) are used for

performing k-fold cross-validation for model assessment, which we will

illustrate in a subsequent section. For now, we won’t specify the

arguments, which will tell PGOcc() not to perform k-fold

cross-validation.

out <- PGOcc(occ.formula = oven.occ.formula,

det.formula = oven.det.formula,

data = ovenHBEF,

inits = oven.inits,

n.samples = n.samples,

priors = oven.priors,

n.omp.threads = 1,

verbose = TRUE,

n.report = 1000,

n.burn = n.burn,

n.thin = n.thin,

n.chains = n.chains)----------------------------------------

Preparing to run the model

----------------------------------------

----------------------------------------

Model description

----------------------------------------

Occupancy model with Polya-Gamma latent

variable fit with 373 sites.

Samples per Chain: 5000

Burn-in: 3000

Thinning Rate: 2

Number of Chains: 3

Total Posterior Samples: 3000

Source compiled with OpenMP support and model fit using 1 thread(s).

----------------------------------------

Chain 1

----------------------------------------

Sampling ...

Sampled: 1000 of 5000, 20.00%

-------------------------------------------------

Sampled: 2000 of 5000, 40.00%

-------------------------------------------------

Sampled: 3000 of 5000, 60.00%

-------------------------------------------------

Sampled: 4000 of 5000, 80.00%

-------------------------------------------------

Sampled: 5000 of 5000, 100.00%

----------------------------------------

Chain 2

----------------------------------------

Sampling ...

Sampled: 1000 of 5000, 20.00%

-------------------------------------------------

Sampled: 2000 of 5000, 40.00%

-------------------------------------------------

Sampled: 3000 of 5000, 60.00%

-------------------------------------------------

Sampled: 4000 of 5000, 80.00%

-------------------------------------------------

Sampled: 5000 of 5000, 100.00%

----------------------------------------

Chain 3

----------------------------------------

Sampling ...

Sampled: 1000 of 5000, 20.00%

-------------------------------------------------

Sampled: 2000 of 5000, 40.00%

-------------------------------------------------

Sampled: 3000 of 5000, 60.00%

-------------------------------------------------

Sampled: 4000 of 5000, 80.00%

-------------------------------------------------

Sampled: 5000 of 5000, 100.00%

names(out) # Look at the contents of the resulting object. [1] "rhat" "beta.samples" "alpha.samples" "z.samples"

[5] "psi.samples" "like.samples" "ESS" "X"

[9] "X.p" "X.re" "X.p.re" "y"

[13] "n.samples" "call" "n.post" "n.thin"

[17] "n.burn" "n.chains" "pRE" "psiRE"

[21] "run.time" PGOcc() returns a list of class PGOcc with

a suite of different objects, many of them being coda::mcmc

objects of posterior samples. Note the “Preparing to run the model”

printed section doesn’t have any information shown in it.

spOccupancy model fitting functions will present messages

when preparing the data for the model in this section, or will print out

the default priors or initial values used when they are not specified in

the function call. Here we specified everything explicitly so no

information was reported.

For a concise yet informative summary of the regression parameters

and convergence of the MCMC chains, we can use summary() on

the resulting PGOcc() object.

summary(out)

Call:

PGOcc(occ.formula = oven.occ.formula, det.formula = oven.det.formula,

data = ovenHBEF, inits = oven.inits, priors = oven.priors,

n.samples = n.samples, n.omp.threads = 1, verbose = TRUE,

n.report = 1000, n.burn = n.burn, n.thin = n.thin, n.chains = n.chains)

Samples per Chain: 5000

Burn-in: 3000

Thinning Rate: 2

Number of Chains: 3

Total Posterior Samples: 3000

Run Time (min): 0.073

Occurrence (logit scale):

Mean SD 2.5% 50% 97.5% Rhat ESS

(Intercept) 2.1370 0.2728 1.6490 2.1188 2.7079 1.0001 834

scale(Elevation) -1.7024 0.2983 -2.4095 -1.6701 -1.2336 1.0100 402

I(scale(Elevation)^2) -0.3992 0.2038 -0.7687 -0.4103 0.0481 1.0030 645

Detection (logit scale):

Mean SD 2.5% 50% 97.5% Rhat ESS

(Intercept) 0.7942 0.1269 0.5394 0.7940 1.0407 1.0018 2694

scale(day) -0.0904 0.0773 -0.2450 -0.0910 0.0587 1.0021 3814

scale(tod) -0.0460 0.0781 -0.2008 -0.0457 0.1030 0.9996 3000

I(scale(day)^2) 0.0238 0.0966 -0.1625 0.0231 0.2209 1.0053 3000Note that all coefficients are printed on the logit scale. We see

OVEN is fairly widespread (at the scale of the survey) in the forest

given the large intercept value, which translates to a value of 0.89 on

the probability scale (run plogis(2.13)). In addition, the

negative linear and quadratic terms for Elevation suggest

occurrence probability peaks at mid-elevations. On average, detection

probability is about 0.69.

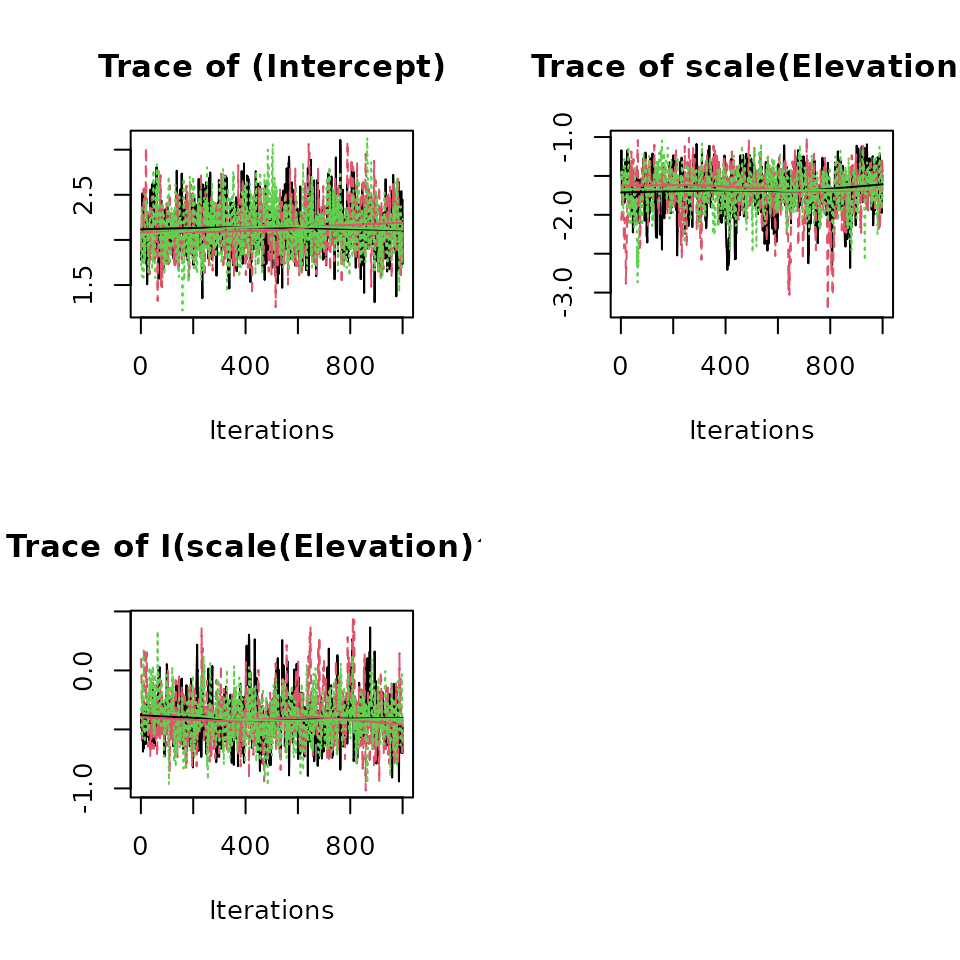

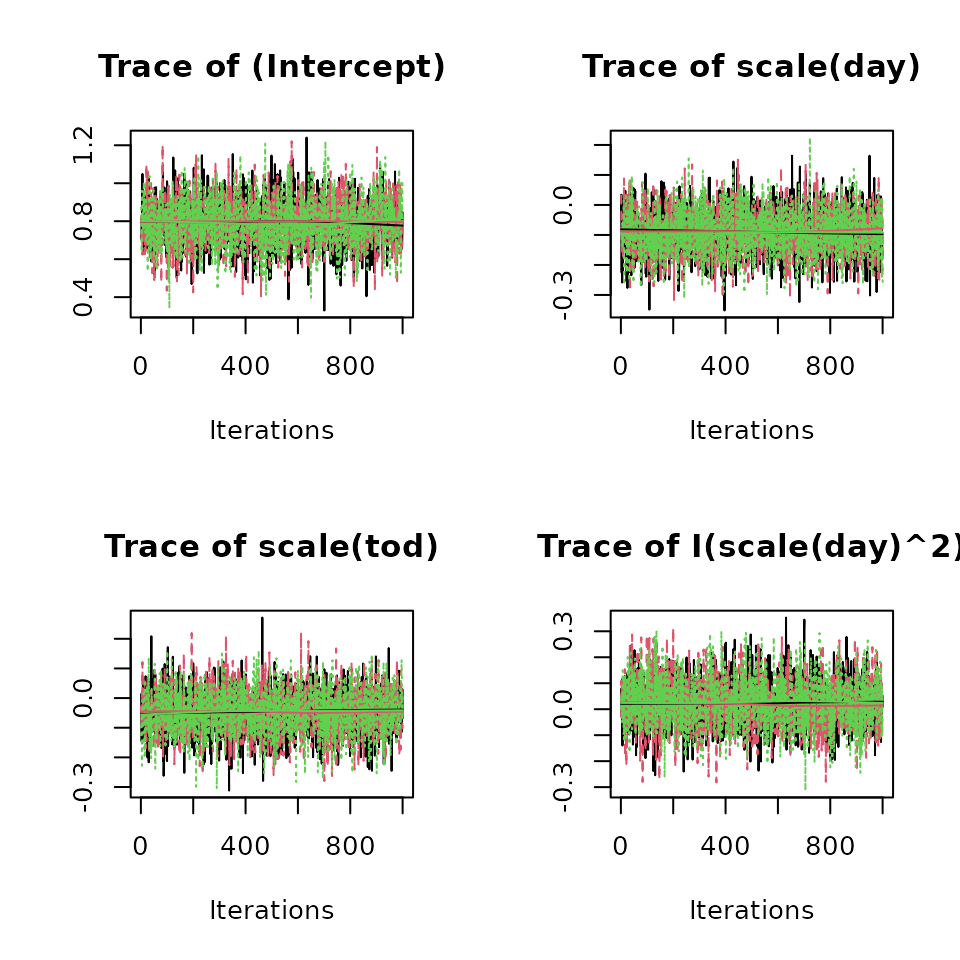

In principle, before we even look at the parameter summaries, we

ought to ensure that the MCMC chains have reached convergence. The

summary function also returns the Gelman-Rubin diagnostic

(Brooks and Gelman 1998) and the effective

samples size (ESS) of the posterior samples, which we can use to assess

convergence. Here we see all Rhat values are less than 1.1 and the ESS

values indicate adequate mixing of the MCMC chains. Additionally, we can

use the plot() function to plot traceplots of the

individual model parameters that are contained in the resulting

PGOcc object. The plot() function takes three

arguments: x (the model object), param (a

character string denoting the parameter name), and density

(logical value indicating whether to also plot the density of MCMC

samples along with the traceplot). See ?plot.PGOcc for more

details (similar functions exist for all spOccupancy model

objects).

plot(out, 'beta', density = FALSE) # Occupancy parameters.

plot(out, 'alpha', density = FALSE) # Detection parameters.

Posterior predictive checks

The function ppcOcc() performs a posterior predictive

check on all spOccupancy model objects as a Goodness of Fit

(GoF) assessment. The fundamental idea of GoF testing is that a good

model should generate data that closely align with the observed data. If

there are drastic differences in the true data from the model generated

data, our model is likely not very useful (Hobbs

and Hooten 2015). GoF assessments are more complicated using

binary data, like detection-nondetection used in occupancy models, as

standard approaches are not valid assessments for the raw data in binary

response models such as occupancy models (Broms,

Hooten, and Fitzpatrick 2016; McCullagh and Nelder 2019). Thus,

any approach to assess model fit for detection-nondetection data must

bin, or aggregate, the raw values in some manner, and then perform a

model fit assessment on the binned values. There are numerous ways we

could envision binning the raw detection-nondetection values (MacKenzie and Bailey 2004; Kéry and Royle

2016). In spOccupancy, a posterior predictive check

broadly takes the following steps:

- Fit the model using any of the model-fitting functions (here

PGOcc()), which generates replicated values for all detection-nondetection data points. - Bin both the actual and the replicated detection-nondetection data in a suitable manner, such as by site or replicate (MacKenzie and Bailey 2004).

- Compute a fit statistic on both the actual data and also on the model-generated ‘replicate data’.

- Compare the fit statistics for the true data and replicate data. If they are widely different, this suggests a lack of fit of the model to the actual data set at hand.

To perform a posterior predictive check, we send the resulting

PGOcc model object as input to the ppcOcc()

function, along with a fit statistic (fit.stat) and a

numeric value indicating how to group, or bin, the data

(group). Currently supported fit statistics include the

Freeman-Tukey statistic and the Chi-Squared statistic

(freeman-tukey or chi-squared, respectively,

Kéry and Royle (2016)). Currently,

ppcOcc() allows the user to group the data by row (site;

group = 1) or column (replicate; group = 2).

ppcOcc() will then return a set of posterior samples for

the fit statistic (or discrepancy measure) using the actual data

(fit.y) and model generated replicate data set

(fit.y.rep), summed across all data points in the chosen

manner. We generally recommend performing a posterior predictive check

when grouping data both across sites (group = 1) as well as

across replicates (group = 2), as they may reveal (or fail

to reveal) different inadequacies of the model for the specific data set

at hand (Kéry and Royle 2016). In

particular, binning the data across sites (group = 1) may

help reveal whether the model fails to adequately represent variation in

occurrence and detection probability across space, while binning the

data across replicates (group = 2) may help reveal whether

the model fails to adequately represent variation in detection

probability across the different replicate surveys. Similarly, we

suggest exploring posterior predictive checks using both the

Freeman-Tukey Statistic as well as the Chi-Squared statistic. Generally,

the more ways we explore the fit of our model to the data, the more

confidence we have that our model is adequately representing the data

and we can draw reasonable conclusions from it. By performing posterior

predictive checks with the two fit statistics and two ways of grouping

the data, spOccupancy provides us with four different

measures that we can use to assess the validity of our model. Throughout

this vignette, we will display different types of posterior predictive

checks using different combinations of the fit statistic and grouping

approach. In a more complete analysis, we would run all four types of

posterior predictive checks for each model we fit as a more complete GoF

assessment.

The resulting values from a call to ppcOcc() can be used

with the summary() function to generate a Bayesian p-value,

which is the probability, under the fitted model, to obtain a value of

the fit statistic that is more extreme (i.e., larger) than the one

observed, i.e., for the actual data. Bayesian p-values are sensitive to

individual values, so we should also explore the discrepancy measures

for each “grouped” data point. ppcOcc() returns a matrix of

posterior quantiles for the fit statistic for both the observed

(fit.y.group.quants) and model generated, replicate data

(fit.y.rep.group.quants) for each “grouped” data point.

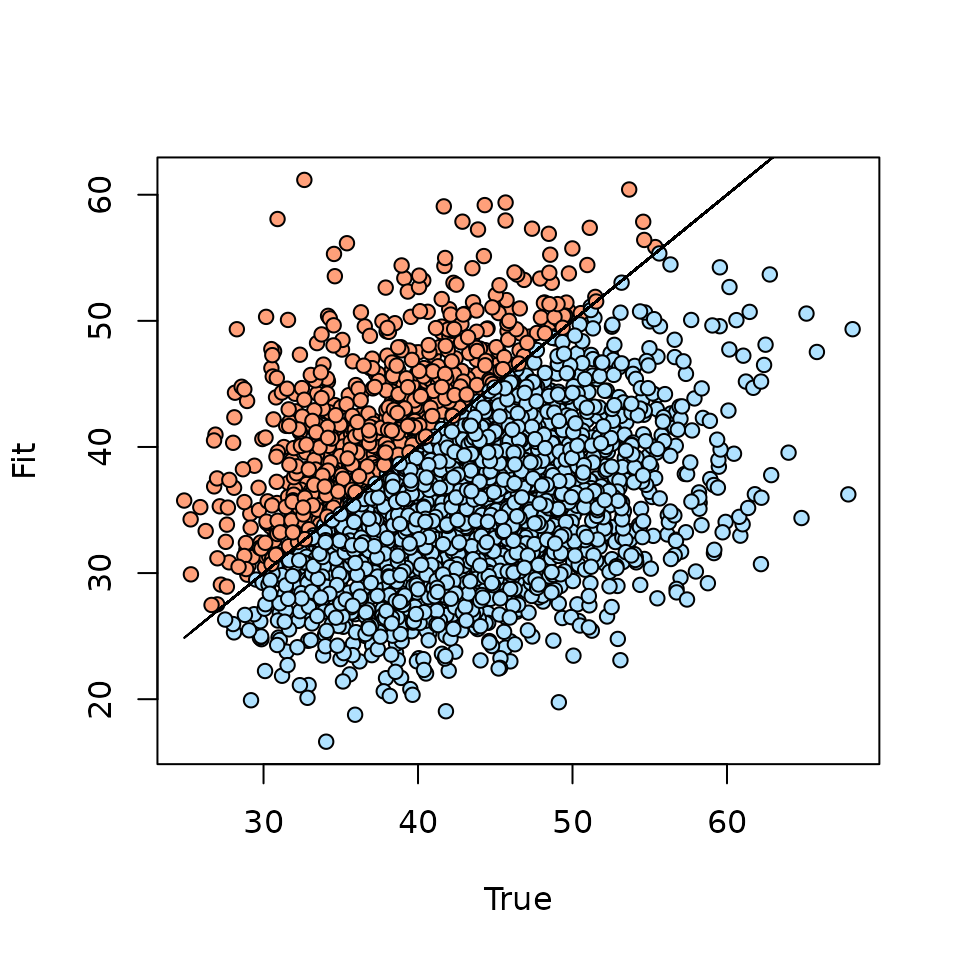

We next perform a posterior predictive check using the Freeman-Tukey

statistic grouping the data by sites. We summarize the posterior

predictive check with the summary() function, which reports

a Bayesian p-value. A Bayesian p-value that hovers around 0.5 indicates

adequate model fit, while values less than 0.1 or greater than 0.9

suggest our model does not fit the data well (Hobbs and Hooten 2015). As always with a

simulation-based analysis using MCMC, you will get numerically slightly

different values.

Call:

ppcOcc(object = out, fit.stat = "freeman-tukey", group = 1)

Samples per Chain: 5000

Burn-in: 3000

Thinning Rate: 2

Number of Chains: 3

Total Posterior Samples: 3000

Bayesian p-value: 0.2403

Fit statistic: freeman-tukey The Bayesian p-value is the proportion of posterior samples of the fit statistic of the model generated data that are greater than the corresponding fit statistic of the true data, summed across all “grouped” data points. We can create a visual representation of the Bayesian p-value as follows (Kéry and Royle 2016):

ppc.df <- data.frame(fit = ppc.out$fit.y,

fit.rep = ppc.out$fit.y.rep,

color = 'lightskyblue1')

ppc.df$color[ppc.df$fit.rep > ppc.df$fit] <- 'lightsalmon'

plot(ppc.df$fit, ppc.df$fit.rep, bg = ppc.df$color, pch = 21,

ylab = 'Fit', xlab = 'True')

lines(ppc.df$fit, ppc.df$fit, col = 'black')

Our Bayesian p-value is greater than 0.1 indicating no lack of fit,

although the above plot shows most of the fit statistics are smaller for

the replicate data than the actual data set. Relying solely on the

Bayesian p-value as an assessment of model fit is not always a great

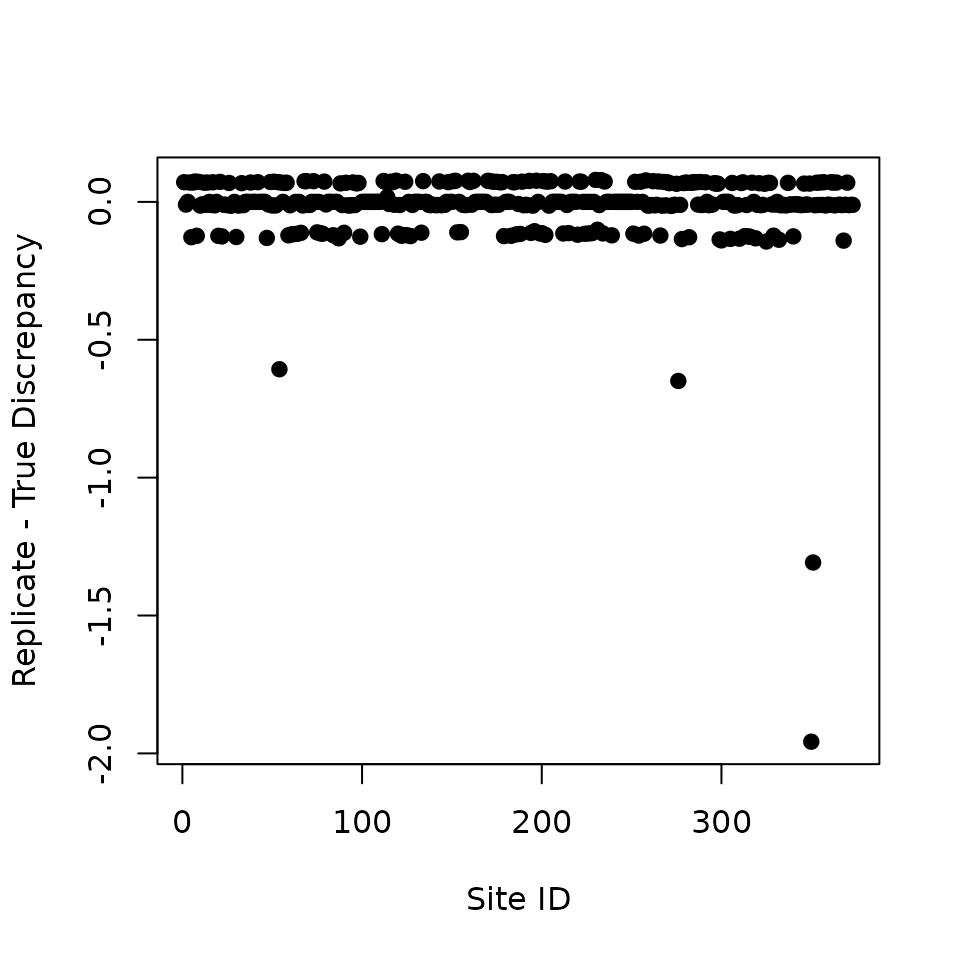

option, as individual data points can have an overbearing influence on

the resulting summary value. Instead of summing across all data points

for a single discrepancy measure, ppcOcc() also allows us

to explore discrepancy measures on a “grouped” point by point basis. The

resulting ppcOcc object will contain the objects

fit.y.group.quants and fit.y.rep.group.quants,

which contain quantiles of the posterior distributions for the

discrepancy measures of each grouped data point. Below we plot the

difference in the discrepancy measure between the replicate and actual

data across each of the sites.

diff.fit <- ppc.out$fit.y.rep.group.quants[3, ] - ppc.out$fit.y.group.quants[3, ]

plot(diff.fit, pch = 19, xlab = 'Site ID', ylab = 'Replicate - True Discrepancy')

We see there are four sites that contribute to the overall GoF measure far more than in proportion to their number, i.e., whose discrepancy measure for the actual data is much larger than the corresponding discrepancy for the replicate data. Here we will ignore this, but in a real analysis it might be very insightful to further explore these sites to see what could explain this pattern (e.g., are the sites close together in space? Could the data be the result of erroneous recording, or of extraordinary local habitat that is not adequately described by the occurrence covariates?). Although rarely done, closer investigations of outlying values in statistical analyses may sometimes teach one more than mere acceptance of a fitting model.

Model selection using WAIC and k-fold cross-validation

Posterior predictive checks allow us to assess how well our model fits the data, but they are not very useful if we want to compare multiple competing models and ultimately select a final model based on some criterion. Bayesian model selection is very much a constantly changing field. See Hooten and Hobbs (2015) for an accessible overview of Bayesian model selection for ecologists.

For Bayesian hierarchical models like occupancy models, the most common Bayesian model selection criterion, the deviance information criterion or DIC, is not applicable (Hooten and Hobbs 2015). Instead, the Widely Applicable Information Criterion (Watanabe 2010) is recommended to compare a set of models and select the best-performing model for final analysis.

The WAIC is calculated for all spOccupancy model objects

using the function waicOcc(). We calculate the WAIC as

\[ \text{WAIC} = -2 \times (\text{elpd} - \text{pD}), \]

where elpd is the expected log point-wise predictive density and PD is the effective number of parameters. We calculate elpd by calculating the likelihood for each posterior sample, taking the mean of these likelihood values, taking the log of the mean of the likelihood values, and summing these values across all sites. We calculate the effective number of parameters by calculating the variance of the log likelihood for each site taken over all posterior samples, and then summing these values across all sites. See Appendix S1 from Broms, Hooten, and Fitzpatrick (2016) for more details.

We calculate the WAIC using waicOcc() for our OVEN model

below (as always, note some slight differences with your solutions due

to Monte Carlo error).

waicOcc(out) elpd pD WAIC

-632.960543 6.290149 1278.501382 For illustration, let’s next rerun the OVEN model, but this time we assume occurrence is constant across the HBEF, and subsequently compare the WAIC value to the full model

out.small <- PGOcc(occ.formula = ~ 1,

det.formula = oven.det.formula,

data = ovenHBEF,

inits = oven.inits,

n.samples = n.samples,

priors = oven.priors,

n.omp.threads = 1,

verbose = FALSE,

n.burn = n.burn,

n.thin = n.thin,

n.chains = n.chains)

waicOcc(out.small) elpd pD WAIC

-692.046183 4.932945 1393.958257 Smaller values of WAIC indicate models with better performance. We see the WAIC for the model with elevation is smaller than the intercept-only model, indicating elevation is an important predictor for OVEN occurrence in HBEF.

When focusing primarily on predictive performance, a k-fold

cross-validation (CV) approach is another attractive, though

computationally more intensive, alternative to compare a series of

models, especially since WAIC may not always be reliable for occupancy

models (Broms, Hooten, and Fitzpatrick

2016). In spOccupancy, k-fold cross-validation is

accomplished using the arguments k.fold,

k.fold.threads, and k.fold.seed in the model

fitting function. A k-fold cross validation approach requires fitting a

model \(k\) times, where each time the

model is fit using \(J / k\) data

points, where \(J\) is the total number

of sites surveyed at least once in the data set. Each time the model is

fit, it uses a different portion of the data and then predicts the

remaining \(J - J/k\) hold out values.

Because the data are not used to fit the model, CV yields true samples

from the posterior predictive distribution that we can use to assess the

predictive capacity of the model.

As a measure of out-of-sample predictive performance, we use the deviance as a scoring rule following Hooten and Hobbs (2015). For K-fold cross-validation, it is computed as

\[\begin{equation} -2 \sum_{k = 1}^K \text{log}\Bigg(\frac{\sum_{q = 1}^Q \text{Bernoulli}(\boldsymbol{y}_k \mid \boldsymbol{p}^{(q)}\boldsymbol{z}_k^{(q)})}{Q}\Bigg), \end{equation}\]

where \(\boldsymbol{p}^{(q)}\) and

\(\boldsymbol{z}_k^{(q)}\) are MCMC

samples of detection probability and latent occurrence, respectively,

arising from a model that is fit without the observations \(\boldsymbol{y}_k\), and \(Q\) is the total number of posterior

samples produced from the MCMC sampler. The -2 is used so that smaller

values indicate better model fit, which aligns with most information

criteria used for model assessment (like the WAIC implemented using

waicOcc()).

The three arguments in PGOcc() (k.fold,

k.fold.threads, k.fold.seed) control whether

or not k-fold cross validation is performed following the complete fit

of the model using the entire data set. The k.fold argument

indicates the number of \(k\) folds to

use for cross-validation. If k.fold is not specified,

cross-validation is not performed and k.fold.threads and

k.fold.seed are ignored. The k.fold.threads

argument indicates the number of threads to use for running the \(k\) models in parallel across multiple

threads. Parallel processing is accomplished using the R packages

foreach and doParallel. Specifying

k.fold.threads > 1 can substantially decrease run time

since it allows for models to be fit simultaneously on different threads

rather than sequentially. The k.fold.seed indicates the

seed used to randomly split the data into \(k\) groups. This is by default set to 100.

Lastly, the k.fold.only argument controls whether the full

model is run prior to performing cross-validation

(k.fold.only = FALSE, the default). If set to

TRUE, only k-fold cross-validation will be performed. This

can be useful when performing cross-validation after doing some initial

exploration with fitting different models with the full data set so that

you don’t have to rerun the full model again.

Below we refit the occupancy model with elevation (linear and

quadratic) as an occurrence predictor this time performing 4-fold

cross-validation. We set k.fold = 4 to perform 4-fold

cross-validation and k.fold.threads = 1 to run the model

using 1 thread. Normally we would set k.fold.threads = 4,

but using multiple threads leads to complications when compiling this

vignette, so we leave that to you to explore the computational

improvements of performing cross-validation across multiple cores.

out.k.fold <- PGOcc(occ.formula = oven.occ.formula,

det.formula = oven.det.formula,

data = ovenHBEF,

inits = oven.inits,

n.samples = n.samples,

priors = oven.priors,

n.omp.threads = 1,

verbose = TRUE,

n.report = 1000,

n.burn = n.burn,

n.thin = n.thin,

n.chains = n.chains,

k.fold = 4,

k.fold.threads = 1)----------------------------------------

Preparing to run the model

----------------------------------------

----------------------------------------

Model description

----------------------------------------

Occupancy model with Polya-Gamma latent

variable fit with 373 sites.

Samples per Chain: 5000

Burn-in: 3000

Thinning Rate: 2

Number of Chains: 3

Total Posterior Samples: 3000

Source compiled with OpenMP support and model fit using 1 thread(s).

----------------------------------------

Chain 1

----------------------------------------

Sampling ...

Sampled: 1000 of 5000, 20.00%

-------------------------------------------------

Sampled: 2000 of 5000, 40.00%

-------------------------------------------------

Sampled: 3000 of 5000, 60.00%

-------------------------------------------------

Sampled: 4000 of 5000, 80.00%

-------------------------------------------------

Sampled: 5000 of 5000, 100.00%

----------------------------------------

Chain 2

----------------------------------------

Sampling ...

Sampled: 1000 of 5000, 20.00%

-------------------------------------------------

Sampled: 2000 of 5000, 40.00%

-------------------------------------------------

Sampled: 3000 of 5000, 60.00%

-------------------------------------------------

Sampled: 4000 of 5000, 80.00%

-------------------------------------------------

Sampled: 5000 of 5000, 100.00%

----------------------------------------

Chain 3

----------------------------------------

Sampling ...

Sampled: 1000 of 5000, 20.00%

-------------------------------------------------

Sampled: 2000 of 5000, 40.00%

-------------------------------------------------

Sampled: 3000 of 5000, 60.00%

-------------------------------------------------

Sampled: 4000 of 5000, 80.00%

-------------------------------------------------

Sampled: 5000 of 5000, 100.00%

----------------------------------------

Cross-validation

----------------------------------------Performing 4-fold cross-validation using 1 thread(s).We subsequently refit the intercept only occupancy model, and compare the deviance metrics from the 4-fold cross-validation.

# Model fitting information is suppressed for space.

out.int.k.fold <- PGOcc(occ.formula = ~ 1,

det.formula = oven.det.formula,

data = ovenHBEF,

inits = oven.inits,

n.samples = n.samples,

priors = oven.priors,

n.omp.threads = 1,

verbose = FALSE,

n.report = 1000,

n.burn = n.burn,

n.thin = n.thin,

n.chains = n.chains,

k.fold = 4,

k.fold.threads = 1)The cross-validation metric (model deviance) is stored in the

k.fold.deviance tag of the resulting model object.

out.k.fold$k.fold.deviance # Larger model. [1] 1332.179

out.int.k.fold$k.fold.deviance # Intercept-only model.[1] 1533.212Similar to the results from the WAIC, CV also suggests that the model including elevation with a predictor outperforms the intercept only model.

Prediction

All model objects resulting from a call to spOccupancy

model-fitting functions can be used with predict() to

generate a series of posterior predictive samples at new locations,

given the values of all covariates used in the model fitting process.

The object hbefElev (which comes as part of the

spOccupancy package) contains elevation data at a 30x30m

resolution from the National Elevation Data set across the entire HBEF.

We load the data below

'data.frame': 46090 obs. of 3 variables:

$ val : num 914 916 918 920 922 ...

$ Easting : num 276273 276296 276318 276340 276363 ...

$ Northing: num 4871424 4871424 4871424 4871424 4871424 ...The column val contains the elevation values, while

Easting and Northing contain the spatial

coordinates that we will use for plotting. We can obtain posterior

predictive samples for the occurrence probabilities at these sites by

using the predict() function and our PGOcc

fitted model object. Given that we standardized the elevation values

when we fit the model, we need to standardize the elevation values for

prediction using the exact same values of the mean and standard

deviation of the elevation values used to fit the data.

elev.pred <- (hbefElev$val - mean(ovenHBEF$occ.covs[, 1])) / sd(ovenHBEF$occ.covs[, 1])

# These are the new intercept and covariate data.

X.0 <- cbind(1, elev.pred, elev.pred^2)

out.pred <- predict(out, X.0)For PGOcc objects, the predict function

takes two arguments: (1) the PGOcc fitted model object; and

(2) a matrix or data frame consisting of the design matrix for the

prediction locations (which must include and intercept if our model

contained one). The resulting object consists of posterior predictive

samples for the latent occurrence probabilities

(psi.0.samples) and latent occurrence values

(z.0.samples). The beauty of the Bayesian paradigm, and the

MCMC computing machinery, is that these predictions all have fully

propagated uncertainty. We can use these values to create plots of the

predicted mean occurrence values, as well as of their standard deviation

as a measure of the uncertainty in these predictions akin to a standard

error associated with maximum likelihood estimates. We could also

produce a map for the Bayesian credible interval length as an

alternative measure of prediction uncertainty or two maps, one showing

the lower and the other the upper limit of, say, a 95% credible

interval.

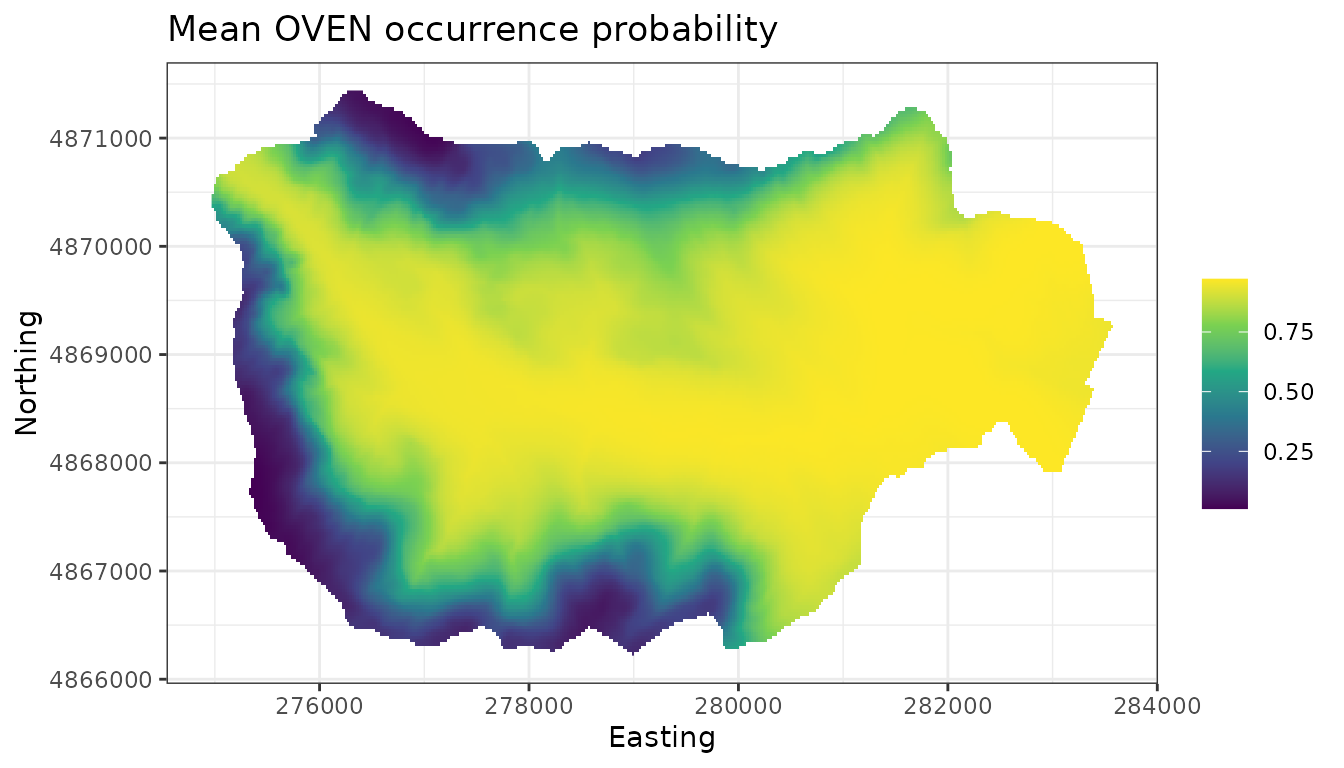

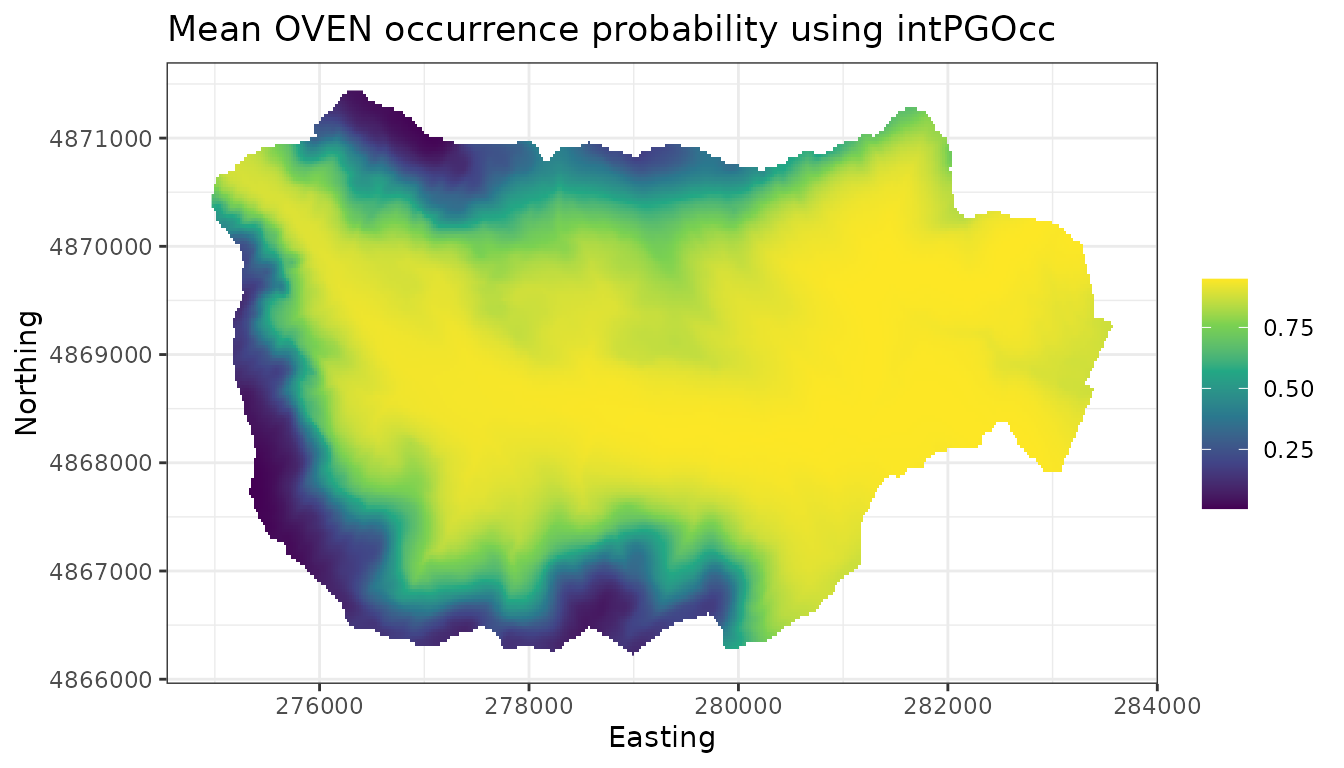

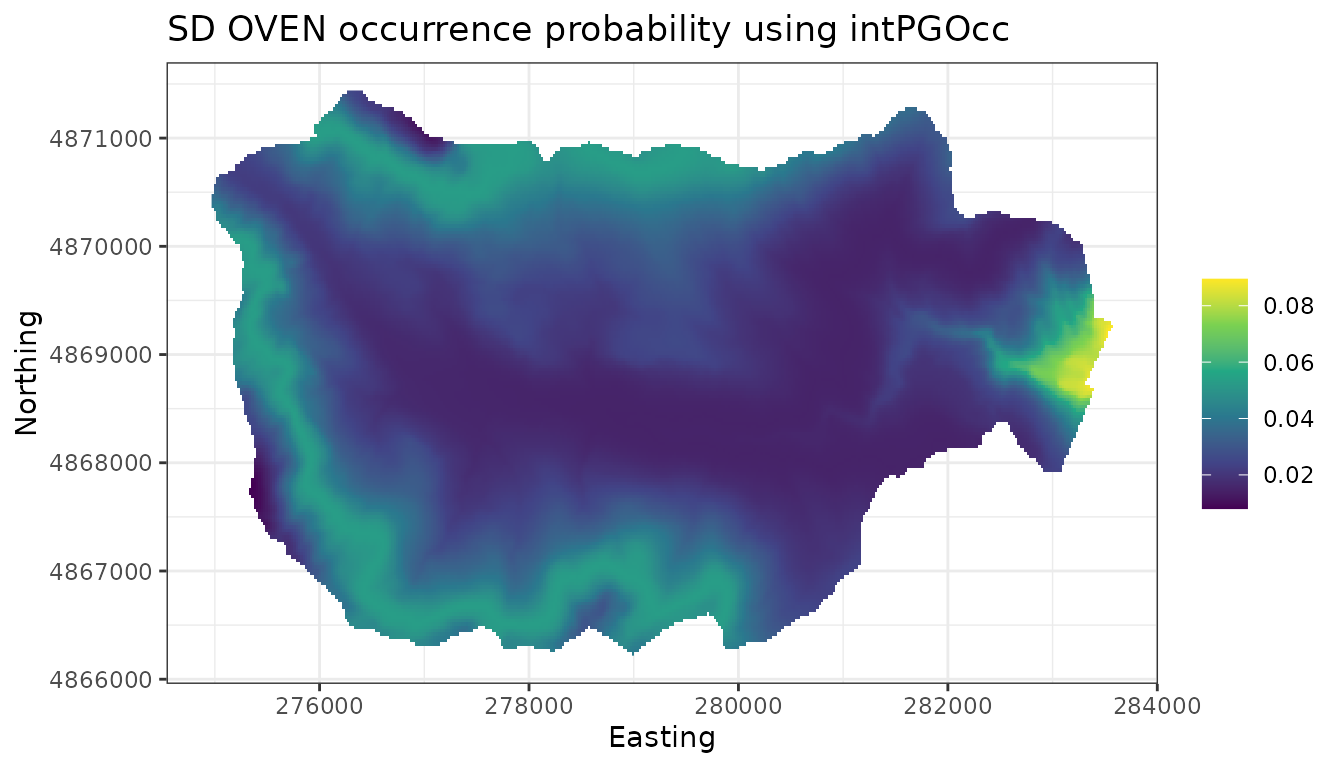

plot.dat <- data.frame(x = hbefElev$Easting,

y = hbefElev$Northing,

mean.psi = apply(out.pred$psi.0.samples, 2, mean),

sd.psi = apply(out.pred$psi.0.samples, 2, sd),

stringsAsFactors = FALSE)

# Make a species distribution map showing the point estimates,

# or predictions (posterior means)

dat.stars <- st_as_stars(plot.dat, dims = c('x', 'y'))

ggplot() +

geom_stars(data = dat.stars, aes(x = x, y = y, fill = mean.psi)) +

scale_fill_viridis_c(na.value = 'transparent') +

labs(x = 'Easting', y = 'Northing', fill = '',

title = 'Mean OVEN occurrence probability') +

theme_bw()

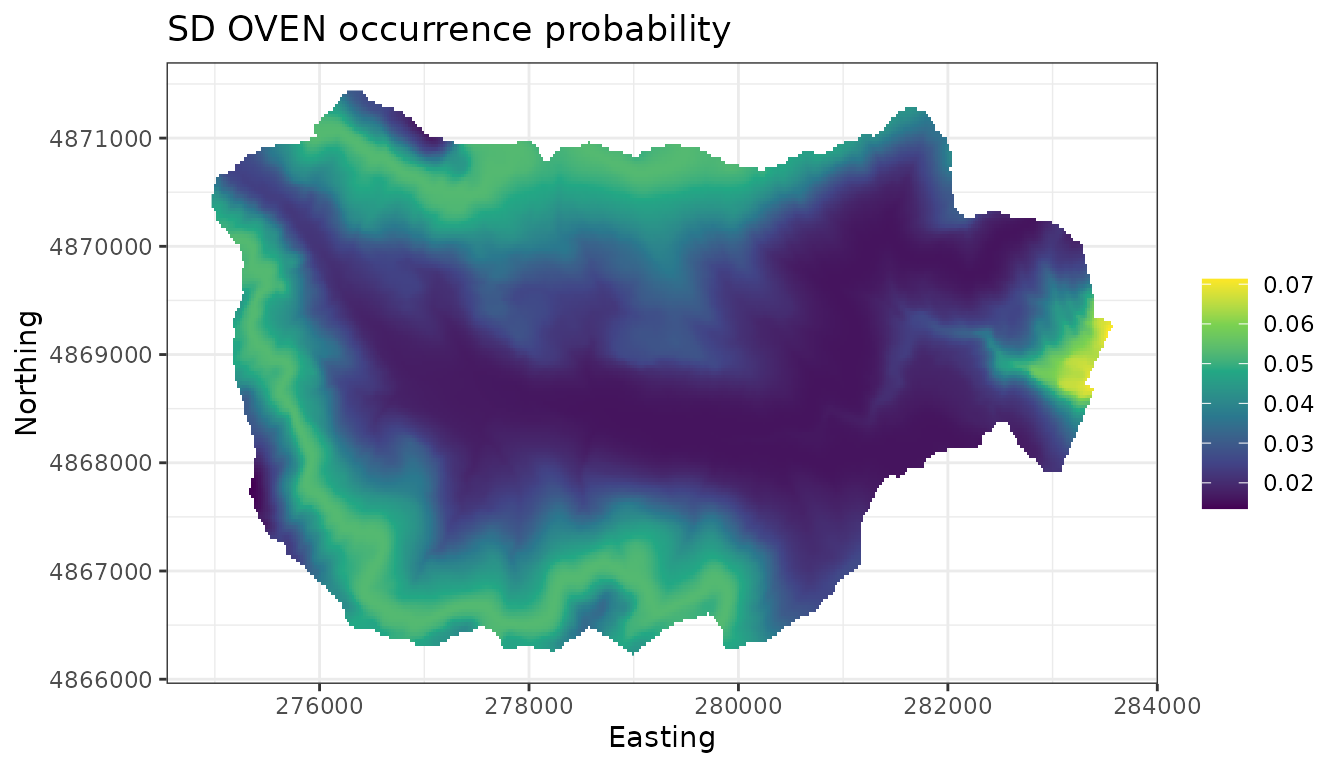

# Map of the associated uncertainty of these predictions

# (i.e., posterior sds)

ggplot() +

geom_stars(data = dat.stars, aes(x = x, y = y, fill = sd.psi)) +

scale_fill_viridis_c(na.value = 'transparent') +

labs(x = 'Easting', y = 'Northing', fill = '',

title = 'SD OVEN occurrence probability') +

theme_bw()

Single-species spatial occupancy models

Basic model description

When working across large spatial domains, accounting for residual spatial autocorrelation in species distributions can often improve predictive performance of a model, leading to more accurate species distribution maps (Guélat and Kéry 2018; Lany et al. 2020). We here extend the basic single-species occupancy model to incorporate a spatial Gaussian Process that accounts for unexplained spatial variation in species occurrence across a region of interest. Let \(\boldsymbol{s}_j\) denote the geographical coordinates of site \(j\) for \(j = 1, \dots, J\). In all spatially-explicit models, we include \(\boldsymbol{s}_j\) directly in the notation of spatially-indexed variables to indicate the model is spatially-explicit. More specifically, the occurrence probability at site \(j\) with coordinates \(\boldsymbol{s}_j\), \(\psi(\boldsymbol{s}_j)\), now takes the form

\[\begin{equation} \text{logit}(\psi(\boldsymbol{s}_j) = \boldsymbol{x}(\boldsymbol{s}_j)^{\top}\boldsymbol{\beta} + \omega(\boldsymbol{s}_j), \end{equation}\]

where \(\omega_j\) is a realization from a zero-mean spatial Gaussian Process, i.e.,

\[\begin{equation} \boldsymbol{\omega}(\boldsymbol{s}) \sim N(\boldsymbol{0}, \boldsymbol{\Sigma}(\boldsymbol{\boldsymbol{s}, \boldsymbol{s}', \theta})). \end{equation}\]

We define \(\boldsymbol{\Sigma}(\boldsymbol{s}, \boldsymbol{s}', \boldsymbol{\theta})\) as a \(J \times J\) covariance matrix that is a function of the distances between any pair of site coordinates \(\boldsymbol{s}\) and \(\boldsymbol{s}'\) and a set of parameters \((\boldsymbol{\theta})\) that govern the spatial process. The vector \(\boldsymbol{\theta}\) is equal to \(\boldsymbol{\theta} = \{\sigma^2, \phi, \nu\}\), where \(\sigma^2\) is a spatial variance parameter, \(\phi\) is a spatial decay parameter, and \(\nu\) is a spatial smoothness parameter. \(\nu\) is only specified when using a Matern correlation function.

The detection portion of the occupancy model remains unchanged from

the non-spatial occupancy model. Single-species spatial occupancy

models, like all models in spOccupancy are fit using

Pólya-Gamma data augmentation (see MCMC sampler vignette for

details).

When the number of sites is moderately large, say 1000, the above described spatial Gaussian process model can become drastically slow as a result of the need to take the inverse of the spatial covariance matrix \(\boldsymbol{\Sigma}(\boldsymbol{s}, \boldsymbol{s}', \boldsymbol{\theta})\) at each MCMC iteration. Numerous approximation methods exist to reduce this computational cost (see Heaton et al. (2019) for an overview and comparison of multiple methods). One attractive approach is the Nearest Neighbor Gaussian Process (NNGP; Datta et al. (2016)). Instead of modeling the spatial process using a full Gaussian Process, we replace the Gaussian Process prior specification with a NNGP, which leads to drastic improvements of run time with nearly identical inference and prediction as the full Gaussian Process specification. See Datta et al. (2016), Finley et al. (2019), and the MCMC sampler vignette for additional statistical details on NNGPs and their implementation in spatial occupancy models.

Fitting single-species spatial occupancy models with

spPGOcc()

The function spPGOcc() fits single-species spatial

occupancy models using Pólya-Gamma latent variables, where spatial

autocorrelation is accounted for using a spatial Gaussian Process.

spPGOcc() fits spatial occupancy models using either a full

Gaussian process or an NNGP. See Finley, Datta,

and Banerjee (2020) for details on using NNGPs with Pólya-Gamma

latent variables.

We will fit the same occupancy model for OVEN that we fit previously

using PGOcc(), but we will now make the model spatially

explicit by incorporating a spatial process with spPGOcc().

First, let’s take a look at the arguments for

spPGOcc():

spPGOcc(occ.formula, det.formula, data, inits, n.batch,

batch.length, accept.rate = 0.43, priors,

cov.model = "exponential", tuning, n.omp.threads = 1,

verbose = TRUE, NNGP = FALSE, n.neighbors = 15,

search.type = "cb", n.report = 100,

n.burn = round(.10 * n.batch * batch.length),

n.thin = 1, n.chains = 1, k.fold, k.fold.threads = 1,

k.fold.seed = 100, k.fold.only = FALSE, ...)We will walk through each of the arguments to spPGOcc()

in the context of our Ovenbird example. The occurrence

(occ.formula) and detection (det.formula)

formulas, as well as the list of data (data), take the same

form as we saw in PGOcc, with random intercepts allowed in

both the occurrence and detection models. Notice the coords

matrix in the ovenHBEF list of data. We did not use this

for PGOcc() but specifying the spatial coordinates in

data is required for all spatially explicit models in

spOccupancy.

oven.occ.formula <- ~ scale(Elevation) + I(scale(Elevation)^2)

oven.det.formula <- ~ scale(day) + scale(tod) + I(scale(day)^2)

str(ovenHBEF) # coords is required for spPGOcc.List of 4

$ y : num [1:373, 1:3] 1 1 0 1 0 0 1 0 1 1 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:373] "1" "2" "3" "4" ...

.. ..$ : chr [1:3] "1" "2" "3"

$ occ.covs: num [1:373, 1] 475 494 546 587 588 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : NULL

.. ..$ : chr "Elevation"

$ det.covs:List of 2

..$ day: num [1:373, 1:3] 156 156 156 156 156 156 156 156 156 156 ...

..$ tod: num [1:373, 1:3] 330 346 369 386 409 425 447 463 482 499 ...

$ coords : num [1:373, 1:2] 280000 280000 280000 280001 280000 ...

..- attr(*, "dimnames")=List of 2

.. ..$ : chr [1:373] "1" "2" "3" "4" ...

.. ..$ : chr [1:2] "X" "Y"The initial values (inits) are again specified in a

list. Valid tags for initial values now additionally include the

parameters associated with the spatial random effects. These include:

sigma.sq (spatial variance parameter), phi

(spatial range parameter), w (the latent spatial random

effects at each site), and nu (spatial smoothness

parameter), where the latter is only specified if adopting a Matern

covariance function (i.e., cov.model = 'matern').

spOccupancy supports four spatial covariance models

(exponential, spherical,

gaussian, and matern), which are specified in

the cov.model argument. Throughout this vignette, we will

use an exponential covariance model, which we often use as our default

covariance model when fitting spatially-explicit models and is commonly

used throughout ecology. To determine which covariance function to use,

we can fit models with the different covariance functions and compare

them using WAIC or k-fold cross-validation to select the best performing

function. We will note that the Matern covariance function has the

additional spatial smoothness parameter \(\nu\) and thus can often be more flexible

than the other functions. However, because we need to estimate an

additional parameter, this also tends to require more data (i.e., a

larger number of sites) than the other covariance functions, and so we

encourage use of the three simpler functions if your data set is sparse.

We note that model estimates are generally fairly robust to the

different covariance functions, although certain functions may provide

substantially better estimates depending on the specific form of the

underlying spatial autocorrelation in the data. For example, the

Gaussian covariance function is often useful for accounting for spatial

autocorrelation that is very smooth (i.e., long range spatial

dependence). See Chapter 2 in Banerjee, Carlin,

and Gelfand (2003) for a more thorough discussion of these

functions and their mathematical properties.

The default initial values for phi,

sigma.sq, and nu are all set to random values

from the prior distribution, which generally will be sufficient for the

models discussed in this vignette. In all spatially-explicit models

described in this vignette, the spatial range parameter phi

is the most sensitive to initial values. In general, the spatial range

parameter will often have poor mixing and take longer to converge than

the rest of the parameters in the model, so specifying an initial value

that is reasonably close to the resulting value can really help decrease

run times for complicated models. As an initial value for the spatial

range parameter phi, we compute the mean distance between

points in HBEF and then set it equal to 3 divided by this mean distance.

When using an exponential covariance function, \(\frac{3}{\phi}\) is the effective range, or

the distance at which the residual spatial correlation between two sites

drops to 0.05 (Banerjee, Carlin, and Gelfand

2003). Thus our initial guess for this effective range is the

average distance between sites across HBEF. As with all other

parameters, we generally recommend using the default initial values for

an initial model run, and if the model is taking a very long time to

converge you can rerun the model with initial values based on the

posterior means of estimated parameters from the initial model fit. For

the spatial variance parameter sigma.sq, we set the initial

value to 2. This corresponds to a fairly small spatial variance, which

we expect based on our previous work with this data set. Further, we set

the initial values of the latent spatial random effects at each site to

0. The initial values for these random effects has an extremely small

influence on the model results, so we generally recommend setting their

initial values to 0 as we have done here (this is also the default).

However, if you are running your model for a very long time and are

seeing very slow convergence of the MCMC chains, setting the initial

values of the spatial random effects to the mean estimates from a

previous run of the model could help reach convergence faster.

# Pair-wise distances between all sites

dist.hbef <- dist(ovenHBEF$coords)

# Exponential covariance model

cov.model <- "exponential"

# Specify list of inits

oven.inits <- list(alpha = 0,

beta = 0,

z = apply(ovenHBEF$y, 1, max, na.rm = TRUE),

sigma.sq = 2,

phi = 3 / mean(dist.hbef),

w = rep(0, nrow(ovenHBEF$y)))The next three arguments (n.batch,

batch.length, and accept.rate) are all related

to the specific type of MCMC sampler we use when we fit the model. The

spatial range parameter (and the spatial smoothness parameter if

cov.model = 'matern') are the two hardest parameters to

estimate in spatially-explicit models in spOccupancy. In

other words, you will often see slow mixing and convergence of the MCMC

chains for these parameters. To try to speed up this slow mixing and

convergence of these parameters, we use an algorithm called an adaptive

Metropolis-Hastings algorithm for all spatially-explicit models in

spOccupancy (see Roberts and

Rosenthal (2009) for more details on this algorithm). In this

approach, we break up the total number of MCMC samples into a set of

“batches”, where each batch has a specific number of MCMC samples. When

we fit a spatially-explicit model in spOccupancy, instead

of specifying the total number of MCMC samples in the

n.samples argument like we did in PGOcc(), we

must specify the total number of batches (n.batch) as well

as the number of MCMC samples each batch contains. Thus, the total

number of MCMC samples is n.batch * batch.length.

Typically, we set batch.length = 25 and then play around

with n.batch until convergence of all model parameters is

reached. We recommend setting batch.length = 25 unless you

have a specific reason to change it. Here we set

n.batch = 400 for a total of 10,000 MCMC samples in each of

3 chains. We additionally specify a burn-in period of length 2000 and a

thinning rate of 20.

batch.length <- 25

n.batch <- 400

n.burn <- 2000

n.thin <- 20

n.chains <- 3Importantly, we also need to specify an acceptance rate and a tuning

parameter for the spatial range parameter (and spatial smoothness

parameter if cov.model = 'matern'), which are both features

of the adaptive algorithm we use to sample these parameters. In this

algorithm, we propose new values for phi (and

nu), compare them to our previous values, and use a

statistical algorithm to determine if we should accept the new proposed

value or keep the old one. The accept.rate argument

specifies the ideal proportion of times we will accept the newly

proposed values for these parameters. Roberts and

Rosenthal (2009) show that if we accept new values around 43% of

the time, this will lead to optimal mixing and convergence of the MCMC

chains. Following these recommendations, we should strive for an

algorithm that accepts new values about 43% of the time. Thus, we

recommend setting accept.rate = 0.43 unless you have a

specific reason not to (this is the default value). The values specified

in the tuning argument helps control the initial values we

will propose for both phi and nu. These values

are supplied as input in the form of a list with tags phi

and nu. The initial tuning value can be any value greater

than 0, but we generally recommend starting the value out around 0.5.

This initial tuning value is closely related to the ideal (or target)

acceptance rate we specified in accept.rate. In short, the

algorithm we use is “adaptive” because the algorithm will change the

tuning values after each batch of the MCMC to yield acceptance rates

that are close to our target acceptance rate that we specified in the

accept.rate argument. Information on the acceptance rates

for phi and nu in your model will be displayed

when setting verbose = TRUE. After some initial runs of the

model, if you notice the final acceptance rate is much larger or smaller

than the target acceptance rate (accept.rate), you can then

change the initial tuning value to get closer to the target rate. While

use of this algorithm requires us to specify more arguments in

spatially-explicit models, this leads to much shorter run times compared

to a more simple approach where we do not have an “adaptive” sampling

approach, and it should thus save you time in the long haul when waiting

for these models to run. For our example here, we set the initial tuning

value to 1 after some initial exploratory runs of the model.

oven.tuning <- list(phi = 1)

# accept.rate = 0.43 by default, so we do not specify it.Priors are again specified in a list in the argument

priors. We follow standard recommendations for prior

distributions from the spatial statistics literature (Banerjee, Carlin, and Gelfand 2003). We assume

an inverse gamma prior for the spatial variance parameter

sigma.sq (the tag of which is sigma.sq.ig),

and uniform priors for the spatial decay parameter phi and

smoothness parameter nu (if using the Matern correlation

function), with the associated tags phi.unif and

nu.unif. The hyperparameters of the inverse Gamma are

passed as a vector of length two, with the first and second elements

corresponding to the shape and scale, respectively. The lower and upper

bounds of the uniform distribution are passed as a two-element vector

for the uniform priors.

As of v0.3.2, we allow users to specify a uniform prior on the

spatial variance parameter sigma.sq (using the tag

sigma.sq.unif) instead of an inverse-Gamma prior. This can

be useful in certain situations when working with a binary response

variable (which we inherently do in occupancy models), as there is a

confounding between the spatial variance parameter sigma.sq

and the occurrence intercept. This occurs as a result of the logit

transformation and a mathematical statement known as Jensen’s Inequality

(Bolker 2015). Briefly, Jensen’s

Inequality tells us that while the spatial random effects don’t

influence the mean on the logit scale (since we give them a prior with

mean 0), they do have an influence on the mean on the actual probability

scale (after back-transforming), which is what leads to potential

confounding between the spatial variance parameter and the occurrence

intercept. Generally, we have found this confounding to be

inconsequential, as the spatial structure of the random effects helps to

separate the spatial variance from the occurrence intercept. However,

there may be certain circumstances when \(\sigma^2\) is estimated to be extremely

large, and the estimate of the occurrence intercept is a very large

magnitude negative number in order to compensate. It can be helpful in

these situations to use a uniform distribution on sigma.sq

to restrict it to taking more reasonable values. We have rarely

encountered such situations, and so throughout this vignette (and in our

the vast majority of our analyses) we will use an inverse-Gamma

prior.

Note that the priors for the spatial parameters in a

spatially-explicit model must be at least weakly informative for the

model to converge (Banerjee, Carlin, and Gelfand

2003). For the inverse-Gamma prior on the spatial variance, we

typically set the shape parameter to 2 and the scale parameter equal to

our best guess of the spatial variance. The default prior hyperparameter

values for the spatial variance \(\sigma^2\) are a shape parameter of 2 and a

scale parameter of 1. This weakly informative prior suggests a prior

mean of 1 for the spatial variance, which is a moderately small amount

of spatial variation. Based on our previous work with these data, we

expected the residual spatial variation to be relatively small, and so

we use this default value and set the scale parameter below to 1. For

the spatial decay parameter, our default approach is to set the lower

and upper bounds of the uniform prior based on the minimum and maximum

distances between sites in the data. More specifically, by default we

set the lower bound to 3 / max and the upper bound to

3 / min, where min and max are

the minimum and maximum distances between sites in the data set,

respectively. This equates to a vague prior that states the spatial

autocorrelation in the data could only exist between sites that are very

close together, or could span across the entire observed study area. If

additional information is known on the extent of the spatial

autocorrelation in the data, you may place more restrictive bounds on

the uniform prior, which would reduce the amount of time needed for

adequate mixing and convergence of the MCMC chains. Here we use this

default approach, but will explicitly set the values for

transparency.

min.dist <- min(dist.hbef)

max.dist <- max(dist.hbef)

oven.priors <- list(beta.normal = list(mean = 0, var = 2.72),

alpha.normal = list(mean = 0, var = 2.72),

sigma.sq.ig = c(2, 1),

phi.unif = c(3/max.dist, 3/min.dist))The argument n.omp.threads specifies the number of

threads to use for within-chain parallelization, while

verbose specifies whether or not to print the progress of

the sampler. We highly recommend setting

verbose = TRUE for all spatial models to ensure the

adaptive MCMC is working as you want (and this is the reason for why

this is the default for this argument). The argument

n.report specifies the interval to report the

Metropolis-Hastings sampler acceptance rate. Note that

n.report is specified in terms of batches, not the overall

number of samples. Below we set n.report = 100, which will

result in information on the acceptance rate and tuning parameters every

100th batch (not sample).

n.omp.threads <- 1

verbose <- TRUE

n.report <- 100 # Report progress at every 100th batch.The parameters NNGP, n.neighbors, and

search.type relate to whether or not you want to fit the

model with a Gaussian Process or with NNGP, which is a much more

computationally efficient approximation. The argument NNGP

is a logical value indicating whether to fit the model with an NNGP

(TRUE) or a regular Gaussian Process (FALSE).

For data sets that have more than 1000 locations, using an NNGP will

have substantial improvements in run time. Even for more modest-sized

data sets, like the HBEF data set, using an NNGP will be quite a bit

faster (especially for multi-species models as shown in subsequent

sections). Unless your data set is particularly small (e.g., 100 points)

and you are concerned about the NNGP approximation, we recommend setting

NNGP = TRUE, which is the default. The arguments

n.neighbors and search.type specify the number

of neighbors used in the NNGP and the nearest neighbor search algorithm,

respectively, to use for the NNGP model. Generally, the default values

of these arguments will be adequate. Datta et al.

(2016) showed that setting n.neighbors = 15 is

usually sufficient, although for certain data sets a good approximation

can be achieved with as few as five neighbors, which could substantially

decrease run time for the model. We generally recommend leaving

search.type = "cb", as this results in a fast code book

nearest neighbor search algorithm. However, details on when you may want

to change this are described in Finley, Datta,

and Banerjee (2020). We will run an NNGP model using the default

value for search.type and setting

n.neighbors = 5, which we have found in exploratory

analysis to closely approximate a full Gaussian Process.

We now fit the model (without k-fold cross-validation) and summarize

the results using summary().

# Approx. run time: < 1 minute

out.sp <- spPGOcc(occ.formula = oven.occ.formula,

det.formula = oven.det.formula,

data = ovenHBEF,

inits = oven.inits,

n.batch = n.batch,

batch.length = batch.length,

priors = oven.priors,

cov.model = cov.model,

NNGP = TRUE,

n.neighbors = 5,

tuning = oven.tuning,

n.report = n.report,

n.burn = n.burn,

n.thin = n.thin,

n.chains = n.chains)----------------------------------------

Preparing to run the model

----------------------------------------

----------------------------------------

Building the neighbor list

----------------------------------------

----------------------------------------

Building the neighbors of neighbors list

----------------------------------------

----------------------------------------

Model description

----------------------------------------

NNGP Spatial Occupancy model with Polya-Gamma latent

variable fit with 373 sites.

Samples per chain: 10000 (400 batches of length 25)

Burn-in: 2000

Thinning Rate: 20

Number of Chains: 3

Total Posterior Samples: 1200

Using the exponential spatial correlation model.

Using 5 nearest neighbors.

Source compiled with OpenMP support and model fit using 1 thread(s).

Adaptive Metropolis with target acceptance rate: 43.0

----------------------------------------

Chain 1

----------------------------------------

Sampling ...

Batch: 100 of 400, 25.00%

Parameter Acceptance Tuning

phi 44.0 0.40252

-------------------------------------------------

Batch: 200 of 400, 50.00%

Parameter Acceptance Tuning

phi 40.0 0.28650

-------------------------------------------------

Batch: 300 of 400, 75.00%

Parameter Acceptance Tuning

phi 60.0 0.31664

-------------------------------------------------

Batch: 400 of 400, 100.00%

----------------------------------------

Chain 2

----------------------------------------

Sampling ...

Batch: 100 of 400, 25.00%

Parameter Acceptance Tuning

phi 56.0 0.29229

-------------------------------------------------

Batch: 200 of 400, 50.00%

Parameter Acceptance Tuning

phi 48.0 0.37158

-------------------------------------------------

Batch: 300 of 400, 75.00%

Parameter Acceptance Tuning

phi 40.0 0.29229

-------------------------------------------------

Batch: 400 of 400, 100.00%

----------------------------------------

Chain 3

----------------------------------------

Sampling ...

Batch: 100 of 400, 25.00%

Parameter Acceptance Tuning

phi 56.0 0.29229

-------------------------------------------------

Batch: 200 of 400, 50.00%

Parameter Acceptance Tuning

phi 44.0 0.31664

-------------------------------------------------

Batch: 300 of 400, 75.00%

Parameter Acceptance Tuning

phi 32.0 0.34301

-------------------------------------------------

Batch: 400 of 400, 100.00%

class(out.sp) # Look at what spOccupancy produced.[1] "spPGOcc"

names(out.sp) [1] "rhat" "beta.samples" "alpha.samples" "theta.samples"

[5] "coords" "z.samples" "X" "X.re"

[9] "w.samples" "psi.samples" "like.samples" "X.p"

[13] "X.p.re" "y" "ESS" "call"

[17] "n.samples" "n.neighbors" "cov.model.indx" "type"

[21] "n.post" "n.thin" "n.burn" "n.chains"

[25] "pRE" "psiRE" "run.time"

summary(out.sp)

Call:

spPGOcc(occ.formula = oven.occ.formula, det.formula = oven.det.formula,

data = ovenHBEF, inits = oven.inits, priors = oven.priors,

tuning = oven.tuning, cov.model = cov.model, NNGP = TRUE,

n.neighbors = 5, n.batch = n.batch, batch.length = batch.length,

n.report = n.report, n.burn = n.burn, n.thin = n.thin, n.chains = n.chains)

Samples per Chain: 10000

Burn-in: 2000

Thinning Rate: 20

Number of Chains: 3

Total Posterior Samples: 1200

Run Time (min): 0.5227

Occurrence (logit scale):

Mean SD 2.5% 50% 97.5% Rhat ESS

(Intercept) 2.8451 0.7525 1.2774 2.8688 4.3535 1.0169 148

scale(Elevation) -2.4890 0.5974 -3.8620 -2.4186 -1.5604 1.0630 194

I(scale(Elevation)^2) -0.6868 0.3364 -1.3461 -0.6853 0.0027 1.0142 882

Detection (logit scale):

Mean SD 2.5% 50% 97.5% Rhat ESS

(Intercept) 0.8201 0.1219 0.5760 0.8161 1.0565 1.0081 1200

scale(day) -0.0916 0.0786 -0.2511 -0.0913 0.0674 1.0094 1200

scale(tod) -0.0465 0.0773 -0.1956 -0.0485 0.1061 1.0071 1397

I(scale(day)^2) 0.0262 0.0948 -0.1540 0.0272 0.2108 1.0022 1235

Spatial Covariance:

Mean SD 2.5% 50% 97.5% Rhat ESS

sigma.sq 5.1171 3.3485 1.1532 4.2911 13.8545 1.0696 100

phi 0.0020 0.0008 0.0007 0.0019 0.0039 1.0158 160Most Rhat values are less than 1.1, although for a full analysis we

should run the model longer to ensure the spatial variance parameter

(sigma.sq) has converged. The effective sample sizes of the

spatial covariance parameters are somewhat low, which indicates limited

mixing of these parameters. This is common when fitting spatial models.

We note that there is substantial MC error in the spatial covariance

parameters, and so your estimates may be slightly different from what we

have shown here. We see spPGOcc() returns a list of class

spPGOcc and consists of posterior samples for all

parameters. Note that posterior samples for spatial parameters are

stored in the list element theta.samples.

Posterior predictive checks

For our posterior predictive check, we send the spPGOcc

model object to the ppcOcc() function, this time grouping

by replicate, or survey occasion, (group = 2) instead of by

site (group = 1).

Call:

ppcOcc(object = out.sp, fit.stat = "freeman-tukey", group = 2)

Samples per Chain: 10000

Burn-in: 2000

Thinning Rate: 20

Number of Chains: 3

Total Posterior Samples: 1200

Bayesian p-value: 0.3625

Fit statistic: freeman-tukey The Bayesian p-value indicates adequate fit of the spatial occupancy model.

Model selection using WAIC and k-fold cross-validation

We next use the waicOcc() function to compute the WAIC,

which we can compare to the non-spatial model to assess the benefit of

incorporating the spatial random effects in the occurrence model.

waicOcc(out.sp) elpd pD WAIC

-570.18590 45.58676 1231.54533

# Compare to non-spatial model

waicOcc(out) elpd pD WAIC

-632.960543 6.290149 1278.501382 We see the WAIC value for the spatial model is substantially reduced compared to the nonspatial model, indicating that incorporation of the spatial random effects yields an improvement in predictive performance.

k-fold cross-validation is accomplished by specifying the

k.fold argument in spPGOcc just as we saw in

PGOcc.

Prediction

Finally, we can perform out of sample prediction using the

predict function just as before. Prediction for spatial

models is more computationally intensive than for non-spatial models,

and so the predict function for spPGOcc class

objects also has options for parallelization

(n.omp.threads) and reporting sampler progress

(verbose and n.report). Note that for

spPGOcc(), you also need to supply the coordinates of the

out of sample prediction locations in addition to the covariate

values.

# Do prediction.

coords.0 <- as.matrix(hbefElev[, c('Easting', 'Northing')])

# Approx. run time: 6 min

out.sp.pred <- predict(out.sp, X.0, coords.0, verbose = FALSE)

# Produce a species distribution map (posterior predictive means of occupancy)

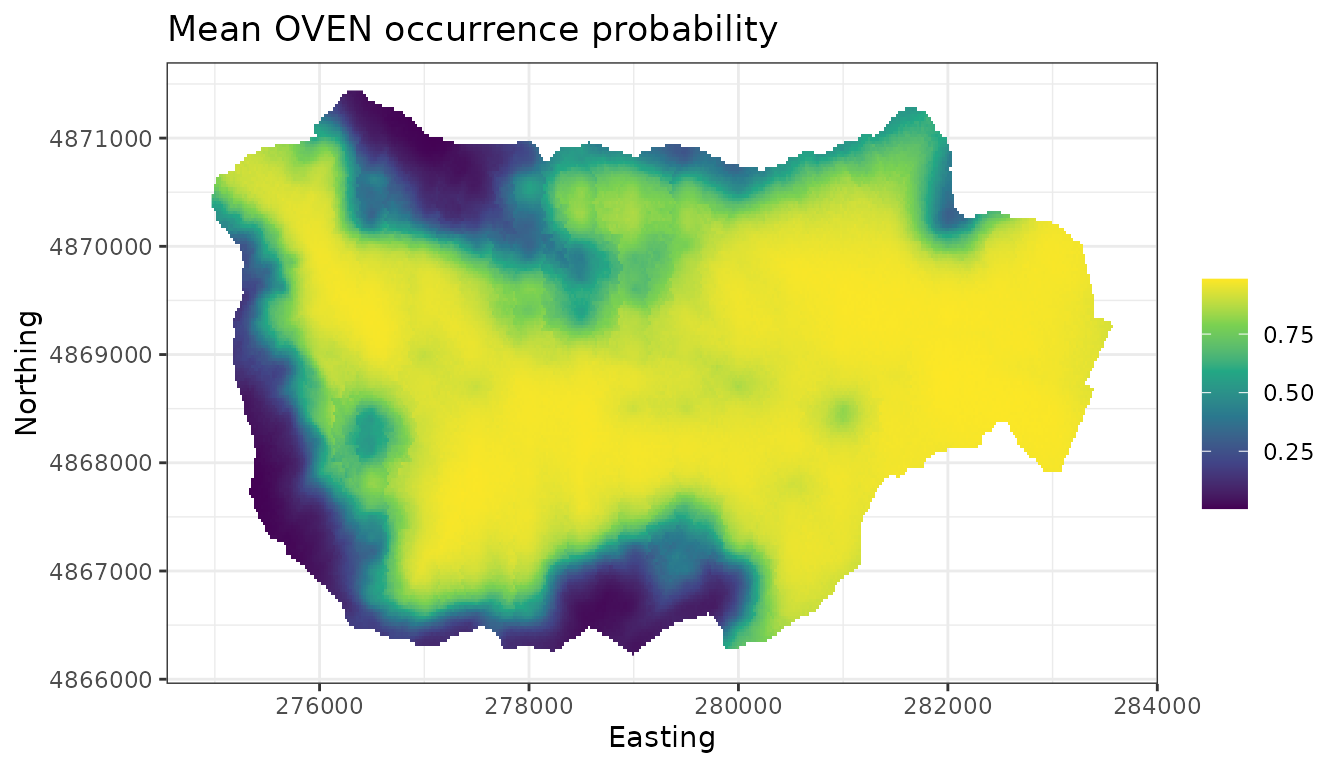

plot.dat <- data.frame(x = hbefElev$Easting,

y = hbefElev$Northing,

mean.psi = apply(out.sp.pred$psi.0.samples, 2, mean),

sd.psi = apply(out.sp.pred$psi.0.samples, 2, sd))

dat.stars <- st_as_stars(plot.dat, dims = c('x', 'y'))

ggplot() +

geom_stars(data = dat.stars, aes(x = x, y = y, fill = mean.psi)) +

scale_fill_viridis_c(na.value = 'transparent') +

labs(x = 'Easting', y = 'Northing', fill = '',

title = 'Mean OVEN occurrence probability') +

theme_bw()

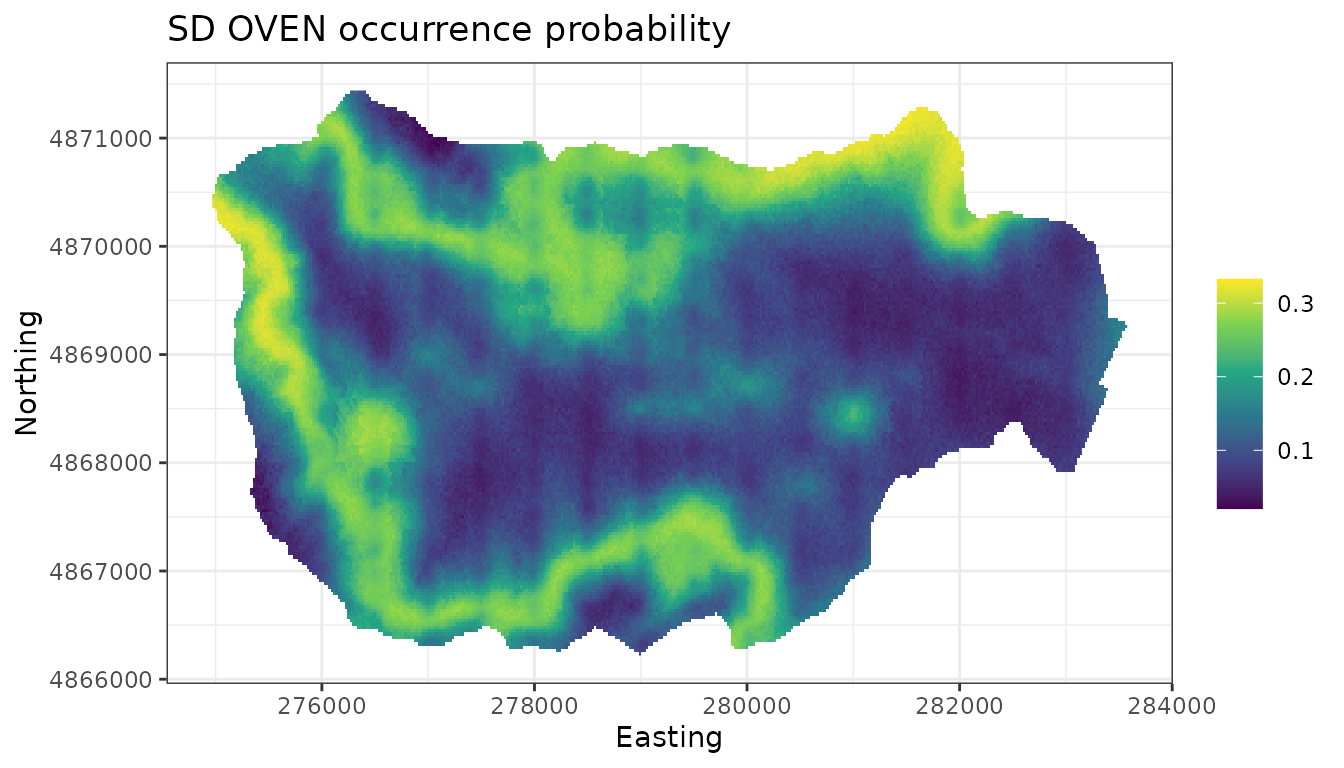

# Produce an uncertainty map of the SDM (posterior predictive SDs of occupancy)

ggplot() +

geom_stars(data = dat.stars, aes(x = x, y = y, fill = sd.psi)) +

scale_fill_viridis_c(na.value = 'transparent') +

labs(x = 'Easting', y = 'Northing', fill = '',

title = 'SD OVEN occurrence probability') +

theme_bw()

Comparing this to the non-spatial occupancy model, the spatial model appears to identify areas in HBEF with low OVEN occurrence that are not captured in the non-spatial model. We will resist trying to hypothesize what environmental factors could lead to these patterns.

Multi-species occupancy models

Basic model description

Let \(z_{i, j}\) be the true presence (1) or absence (0) of a species \(i\) at site \(j\), with \(j = 1, \dots, J\) and \(i = 1, \dots, N\). We assume the latent occurrence variable arises from a Bernoulli process following

\[\begin{equation} \begin{split} &z_{i, j} \sim \text{Bernoulli}(\psi_{i, j}), \\ &\text{logit}(\psi_{i, j}) = \boldsymbol{x}^{\top}_{j}\boldsymbol{\beta}_i, \end{split} \end{equation}\]